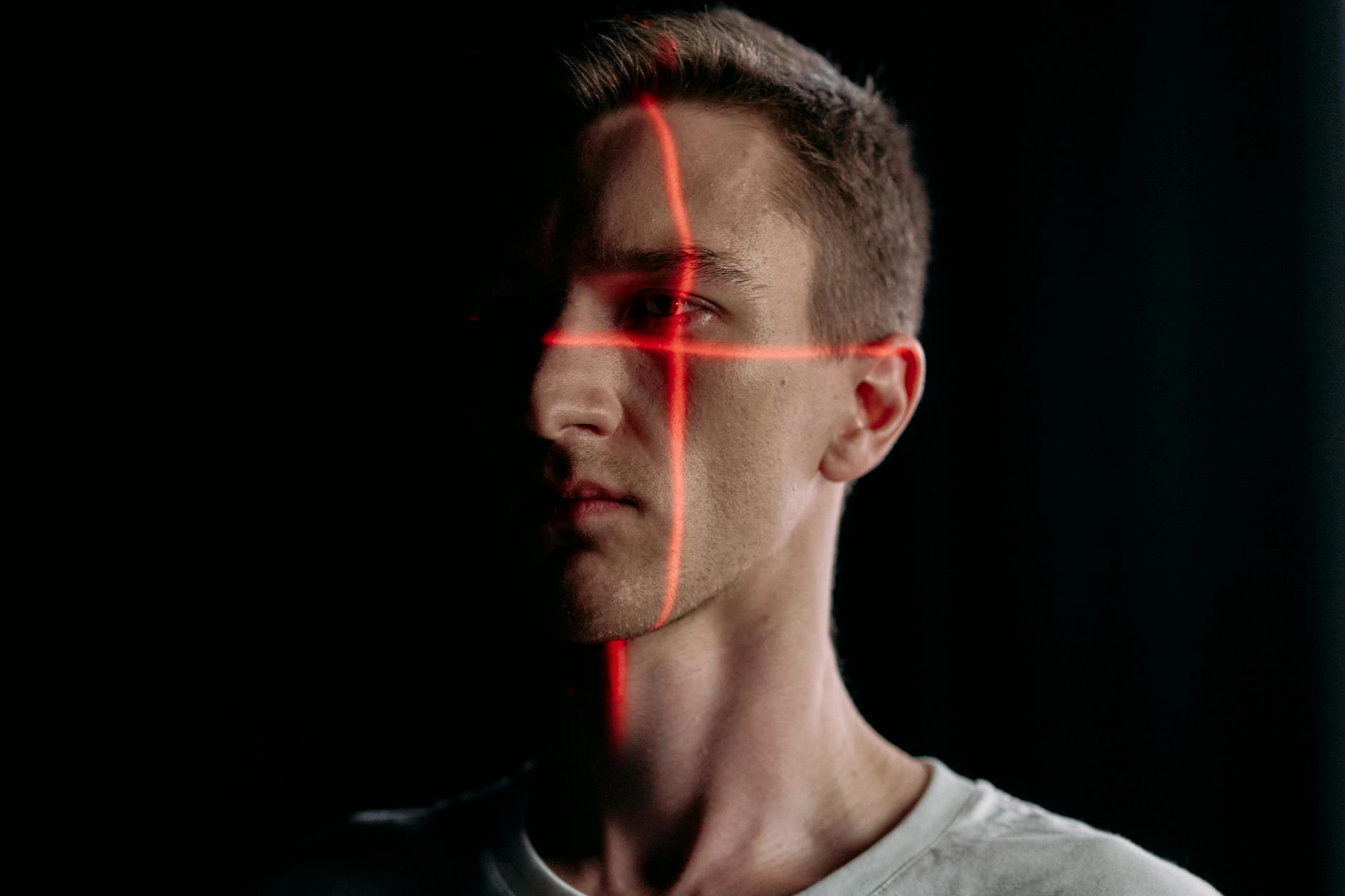

Deepfake technology is changing the way we see and trust digital content. It uses artificial intelligence to create realistic fake videos, audio, or images, and it has raised serious ethical questions for society.

Understanding these ethical concerns matters because deepfakes can affect personal privacy, trust in media, and even national security. Let's explore 15 facts that shed light on the ethical implications of deepfake technology.

Deepfake Technology: 15 Key Ethical Concerns at a Glance:

- Understanding Deepfake Technology: Deepfake technology uses AI to create realistic fake videos, images, and audio that can be very hard to distinguish from real content.

- The Rise of Deepfake Content: The amount of deepfake content is increasing rapidly, with much of it circulating on social media and video-sharing platforms.

- Manipulation and Misinformation: Deepfakes are frequently used to spread false information, misleading viewers and causing confusion.

- Privacy and Consent Issues: Deepfakes often violate privacy by using individuals’ images or voices without consent, leading to serious ethical concerns.

- Impact on Trust in Media: Deepfakes undermine public trust in digital media by making it harder to discern what is real from what is fake.

- Threats to Personal Reputation and Safety: Deepfakes can damage personal reputations and pose safety risks through extortion and blackmail.

- The Legal and Regulatory Landscape: Laws and regulations about deepfakes are still developing, with varying degrees of effectiveness worldwide.

- The Role of Social Media Platforms: Social media platforms are working to detect and remove deepfake content but face challenges in enforcement.

- Technological Countermeasures: Detection Tools: AI tools are being developed to detect deepfakes, though these technologies are constantly challenged by advances in deepfake creation.

- Ethical Debate: Freedom of Expression vs. Regulation: There is an ongoing debate about balancing the regulation of deepfakes with protecting freedom of expression.

- Educational Initiatives to Combat Deepfake Impact: Digital literacy programs aim to educate the public on recognizing and reporting deepfakes.

- Potential Positive Uses of Deepfake Technology: Deepfakes can be used positively in art and education, providing creative and engaging content.

- Corporate and Institutional Responsibilities: Corporations and institutions are beginning to adopt guidelines to manage and mitigate the risks associated with deepfakes.

- The Future of Deepfake Technology: Ethical Predictions: The future of deepfake technology involves more sophisticated tools and ongoing ethical debates.

- Steps Individuals Can Take to Protect Themselves: Individuals can protect themselves by being cautious online, staying informed, and reporting suspicious content.

1. Understanding Deepfake Technology

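Deepfake technology uses AI, typically deep learning models such as autoencoders and generative adversarial networks (GANs), to create realistic fake content. In a GAN, two neural networks are trained against each other: a generator produces fake samples, and a discriminator tries to tell them apart from real ones. This contest pushes the generator toward output that looks and sounds real, whether video, images, or audio.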
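For readers curious about the mechanics, the GAN setup named in this section is usually written as a two-player minimax objective. This is the standard formulation introduced by Goodfellow et al. in 2014, not the exact recipe of any particular deepfake tool. The discriminator $D$ tries to assign high probability to real samples $x$ and low probability to generated samples $G(z)$, while the generator $G$, fed random noise $z$, tries to make $D$ wrong:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

As the discriminator improves at spotting fakes, the generator is pushed to produce output that is harder to distinguish from real data, which is one reason GAN-generated media can look so convincing.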

Many people may not be able to tell the difference. Deepfakes have both positive and negative uses: some creators use them for entertainment or art, while others use them for harmful purposes. This misuse raises many ethical concerns.

Deepfake technology is not just for experts anymore. With user-friendly apps and software, anyone can create a deepfake. This easy access makes it harder to control and manage its impact.


2. The Rise of Deepfake Content

The amount of deepfake content online is growing fast. In 2020, there were over 145,000 deepfake videos, and the number has continued to rise, a sign of how quickly the technology is spreading.

Most deepfake content is found on social media platforms and video-sharing sites. These platforms make it easy for deepfakes to go viral, reaching millions in a short time.

The more common deepfakes become, the harder it is for people to trust what they see and hear online. This erosion of trust is a major ethical issue in the digital age.

3. Manipulation and Misinformation

Deepfakes are often used to spread false information. They can show people saying or doing things they never said or did, which can mislead the public and cause confusion.

Political deepfakes have already been used to try to influence elections. Fake videos of politicians have been spread to damage reputations or sway opinions.

The use of deepfakes for misinformation is dangerous. It can create mistrust in political processes, affect public opinion, and even incite violence.


4. Privacy and Consent Issues

Deepfakes often involve the use of someone’s image or voice without their permission. This is a clear violation of privacy. Many people have been targeted with fake content made without their knowledge.

One of the most harmful types of deepfake is non-consensual fake pornography. These videos often use the faces of celebrities, but private individuals are targeted as well. This can cause severe emotional distress.

Consent is crucial in any use of someone’s image or voice. When deepfakes are made without consent, it raises serious ethical questions about rights and privacy.


5. Impact on Trust in Media

Deepfakes make it harder to trust digital media. With fake content spreading quickly, people are finding it more challenging to know what is real and what is not.

The rise of deepfakes can undermine trust in news outlets. When deepfakes are presented as real news, they can deceive viewers and readers.

Many experts worry that deepfakes will make it harder for genuine journalism to be trusted. This lack of trust could damage the credibility of all digital content.

6. Threats to Personal Reputation and Safety

Deepfakes can ruin personal reputations. A single fake video can spread rapidly online, damaging careers, relationships, and lives.

Some people have faced threats or blackmail because of deepfakes. In some cases, these threats have led to real-world harm.

The psychological impact of being targeted by a deepfake can be severe. Victims often feel powerless, knowing that their image or voice has been used against their will.

7. The Legal and Regulatory Landscape

Laws and regulations about deepfakes vary widely around the world. Some countries have started to pass laws to regulate deepfake content, but enforcement is still a challenge.

One of the biggest issues is jurisdiction. Deepfakes can be created in one country and spread in another, making it hard to enforce laws.

Legal experts believe more comprehensive laws are needed. There is a push for international cooperation to address the challenges posed by deepfakes.


8. The Role of Social Media Platforms

Social media platforms play a significant role in the spread of deepfakes. Many are working to detect and remove harmful content, but their efforts are often not enough.

Platforms like Facebook and Twitter (now X) have introduced policies to ban or label deepfake content. However, these policies are still in the early stages and are often weakly enforced.

Social media companies bear significant responsibility for fighting deepfake misuse. Many people believe they should do more to protect users and maintain trust.

9. Technological Countermeasures: Detection Tools

Detecting deepfakes is a challenge. Some AI tools can identify fake content, but these are not always reliable. New detection methods are needed to stay ahead of the technology.

Tech companies and research institutions are developing advanced AI tools to detect deepfakes. However, creators of deepfakes are also getting better at hiding their tracks.

The battle between deepfake creators and detection tools is ongoing. It is a technological race that will require constant innovation and adaptation.
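One reason a detector that sounds accurate can still be unreliable in practice is the base-rate problem: when genuine videos vastly outnumber fakes, even a small false-positive rate produces many false alarms. The short Python sketch below uses purely hypothetical numbers (a detector that flags 95% of deepfakes and correctly clears 95% of genuine videos, screening a feed where 1% of videos are deepfakes) to compute what share of flagged videos are actually fake. The function name `flagged_precision` is illustrative, not part of any real detection tool.

```python
def flagged_precision(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Share of flagged videos that are truly deepfakes (positive predictive value).

    sensitivity: fraction of deepfakes the detector flags (true positive rate)
    specificity: fraction of genuine videos the detector clears (true negative rate)
    prevalence:  fraction of screened videos that are deepfakes
    """
    # Deepfakes that are correctly flagged.
    true_positives = sensitivity * prevalence
    # Genuine videos that are wrongly flagged as fake.
    false_positives = (1 - specificity) * (1 - prevalence)
    return true_positives / (true_positives + false_positives)


# Hypothetical numbers for illustration only, not measurements of any real tool.
precision = flagged_precision(sensitivity=0.95, specificity=0.95, prevalence=0.01)
print(f"Share of flagged videos that are actually fake: {precision:.1%}")  # prints 16.1%
```

Under these assumed numbers, more than 80% of flagged videos would be genuine, which is why detector output is best treated as a prompt for closer human review rather than a final verdict.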

10. Ethical Debate: Freedom of Expression vs. Regulation

There is an ongoing debate over whether deepfakes should be regulated or whether regulation would restrict freedom of expression. Some argue that regulations are needed to prevent harm, while others worry about censorship.

Finding a balance between regulation and freedom is complex. Every approach comes with its own challenges and potential drawbacks.

Many believe that both regulation and education are necessary. It’s about promoting responsible use while protecting rights.

11. Educational Initiatives to Combat Deepfake Impact

Digital literacy is crucial in fighting deepfakes. Educating people on how to spot and report fake content is essential. Governments, NGOs, and tech companies are working on programs to teach the public about deepfakes.

These initiatives aim to create a more informed and cautious audience. Encouraging critical thinking is key. People need to question what they see and verify information before trusting it.


12. Potential Positive Uses of Deepfake Technology

Not all uses of deepfakes are harmful. In art and entertainment, they can create striking visual effects and tell new kinds of stories.

Deepfakes can also be used for education, allowing historical figures to “come to life” and share knowledge in engaging ways.

Even in these positive uses, ethical considerations are important. Consent and transparency are essential to maintain trust.

13. Corporate and Institutional Responsibilities

Corporations and institutions have a role in managing deepfake risks. They need to adopt ethical guidelines and best practices.

Many organizations are starting to develop policies on deepfake use and creation. This includes clear rules about consent and authenticity.

Businesses must also educate their employees about the risks and responsibilities associated with deepfakes. Awareness and training can prevent misuse.


14. The Future of Deepfake Technology: Ethical Predictions

Experts believe that deepfake technology will continue to advance. This means that ethical challenges will grow alongside it.

There are predictions that deepfakes could become even more convincing, making detection harder. New strategies will be needed to combat this.

The future will likely see new laws, better tools, and more awareness. But the ethical debates around deepfakes are far from over.

15. Steps Individuals Can Take to Protect Themselves

Individuals can protect themselves by being cautious about what they share online. Limiting personal information can reduce the risk of being targeted.

Staying informed about deepfakes is also crucial. Learning to spot the signs of a fake can help people avoid falling victim.

Reporting suspicious content helps others too. Everyone has a part to play in keeping the digital world safe and trustworthy.


Final note:

Deepfake technology presents both opportunities and challenges. While it offers creative possibilities, it also poses serious ethical questions.

To address these issues, we need a mix of regulation, education, and technological innovation.

By understanding the ethical implications of deepfakes, we can work towards a safer and more trustworthy online environment.

15 FAQs (Frequently Asked Questions):

- What is deepfake technology?

  Deepfake technology uses AI to create convincing fake videos, images, or audio that are hard to distinguish from real content.

- How does deepfake technology work?

  Deepfake technology uses deep learning models, such as autoencoders and generative adversarial networks (GANs), to generate realistic fake content from existing media.

- What are the main ethical concerns with deepfakes?

  The main concerns include privacy violations, misinformation, and the potential for damage to personal reputations.

- How are deepfakes used to spread misinformation?

  Deepfakes can create realistic but fake content that misleads viewers and is often used to manipulate public opinion or political outcomes.

- What privacy issues are associated with deepfakes?

  Deepfakes can be made without individuals' consent, leading to unauthorized use of their likenesses or voices.

- How do deepfakes impact trust in media?

  Deepfakes make it difficult to verify the authenticity of media, which can undermine public trust in news and information sources.

- What threats do deepfakes pose to personal safety?

  Deepfakes can be used for blackmail or harassment, potentially leading to personal and professional harm.

- Are there any laws regulating deepfakes?

  Laws are still developing. Some countries have introduced regulations to address deepfake content, but enforcement remains a challenge.

- How are social media platforms handling deepfakes?

  Platforms are implementing detection and removal policies, but the effectiveness of these measures varies.

- What tools are available to detect deepfakes?

  AI-based detection tools are being developed to identify deepfakes, though they face challenges as deepfake technology advances.

- What is the debate around regulating deepfakes?

  The debate centers on balancing the need for regulation to prevent harm with the protection of freedom of expression.

- How can digital literacy help with deepfakes?

  Educational initiatives aim to improve digital literacy, helping people recognize and report deepfake content effectively.

- Can deepfakes be used positively?

  Yes. Deepfakes have potential positive uses in art and education, where they can create engaging and innovative content.

- What responsibilities do companies have regarding deepfakes?

  Companies are encouraged to adopt ethical guidelines and best practices to manage deepfake risks and protect users.

- What are future trends in deepfake technology?

  Future trends include more advanced deepfake tools and ongoing ethical discussions about their impact and regulation.